Coffee Crisis is a neo-rogue brawler that puts you in the shoes of the only baristas on Earth with enough heavy metal in their veins to fend off an alien assault. Play solo or join up with a friend to fight across eight unique locations ranging from your Coffee House HQ to the far reaches of outer space. Armed with a variety of weapons, special moves, suplexes, and coffee, go up against an army of wild alien enemies and the humans they have brainwashed!

The Smurglian race has come to Earth and they're not leaving until they steal our three most prized commodities: heavy metal, free WiFi, and our coffee. Crunch through fistfuls of alien meat as you stop their invasion, and enjoy random modifiers to the enemies and action on each playthrough. It's a unique cup of beat 'em up action every time you play!

As part of Coffee Crisis' feature set, we integrated Chroma into the game. The game was created for PC and Xbox One using Unity3D, a closed-source but critically acclaimed game engine from Unity Technologies that is used by indie and professional game developers worldwide. To add Chroma integration, we used the official Chroma and Chroma Native Unity3D SDKs. We use Chroma to show colorful animations on the BlackWidow Chroma keyboard in cutscenes and menus, and to encode gameplay information. Specifically, we implemented the following:

- Encoding pain/powerup pickup
  - Every time the player
    - Is hit
      - All other keys unused by other bullet points flash red
    - Picks up a powerup
      - All other keys unused by other bullet points flash yellow

This guide shows aspiring indie game developers how to set up and use the Unity3D Chroma plugins, and gives an exclusive behind-the-scenes look at how we implemented the Chroma functionality in Coffee Crisis!

- Installing Razer Synapse for development

Before you can use a BlackWidow Chroma keyboard for development, you must plug it into your computer for the first time. Chroma development only works with Chroma-enabled BlackWidow keyboards. Certain BlackWidow keyboards do not include Chroma support; please check your user's manual.

Plugging in your Chroma BlackWidow keyboard

Plug your Chroma BlackWidow keyboard into your computer. Some BlackWidow keyboards have two USB cables: one for the keyboard itself and one that acts as a piggyback cable. At a minimum, connect the USB cable labeled "Razer", not the one labeled "Port". After you plug the keyboard in, its drivers should download and install automatically, and the keyboard should light up. To control the lighting and drive it from code, you next need to install the Razer Synapse software.

Installing Razer Synapse for development

Razer Synapse is software created by Razer that lets you configure the Chroma lighting on your Chroma-enabled devices and control that lighting from code (once the Unity Chroma plugins and our own code are in place).

To begin, download and install Razer Synapse. At the time of this writing, the latest version is Razer Synapse 3 Beta, and the previous version is Razer Synapse 2. Either version will work (for this guide, we'll use Razer Synapse 3 Beta). After launching the installer, select which features you want to install and where to install them. At a minimum, you will want to install the Razer Synapse software and Chroma.

- Installing UnityChroma SDK

Now that Razer Synapse is installed and the drivers for the Razer Chroma keyboard are installed (by plugging it in), it is time to set up our Unity project to interface with Chroma!

For a Unity project to interface with Chroma devices, you need to install both the native and the non-native Unity3D plugins for the Chroma SDK. You can find the native plugins here, and the non-native here. The native plugins are required in compiled games (they interface with the hardware), while the non-native plugins are used only in the Editor for creating Chroma animations. Remove the non-native plugins from compiled builds to keep the build size down. Please read the instructions on each download page for how to install and use the plugins.

- Creating animations

With the keyboard's drivers installed, Razer Synapse installed, and the Unity3D Razer plugins installed into your project, you should be good to go for developing with Chroma! Below are a few conceptual notes on how Chroma works in Unity. Full details on how to use Chroma with Unity can be found in the tutorials on the pages where you downloaded the Unity3D Chroma plugins.

Chroma color with the Unity3D plugins works in one of two ways: via Chroma animations, or by manually updating the colors of keys on the fly. This section covers the first method; the second is covered in the behind-the-scenes section at the end of this guide. Chroma animations should not be confused with Unity's animations. Unity animations change the properties of a GameObject's components over time via keyframes (especially sprites and image graphics), while Chroma animations change the colors of Chroma-enabled devices over time.

Chroma animations are simply .chroma files, stored in the StreamingAssets folder inside the project's Assets folder. Unfortunately, Chroma devices are only supported on Windows machines, so when building the game for other platforms (Mac and Linux), you should remove the ChromaSDK plugins, the UnityNativeChromaSDK plugins, and the Chroma animations in the StreamingAssets folder, since they are unused on those platforms and only inflate the build size.

To create a Chroma animation, go to GameObject>ChromaSDK>Create 1D/2D Animation. For keyboard animations, we want 2D animations. Place the Chroma animations in your StreamingAssets folder.

To edit a Chroma animation, right-click the animation and click ChromaSDK>Edit Chroma Animation. This brings up a custom Chroma editor in the Inspector.

In the custom editor, you can select the device type ("Keyboard" for this tutorial), select key types from the keyboard, and assign colors to each key in each frame of the animation. You can also import an image to assign colors to each key (however, this feature seems to crash often). As you modify colors, the Chroma devices attached to the computer update to display your color configuration.

As an alternative to assigning colors to each key individually, you can use the Chroma capture tools to map an image onto the keys. To use these tools, go to Windows>ChromaSDK>Open Chroma Particle Window. To learn how to use these capture windows, check out the tutorials here.

Animation playback

Now that we have our animations defined for the game, we need to add code to play them back. Any Unity3D script that uses the native Chroma plugins needs this namespace imported at the top of the script:

```csharp
#if UNITY_STANDALONE_WIN
using ChromaSDK;
#endif
```

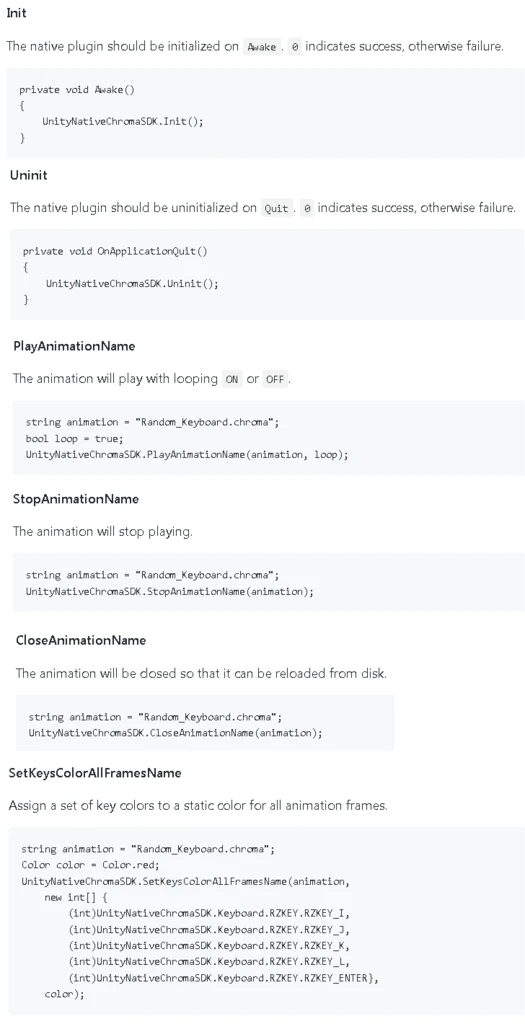

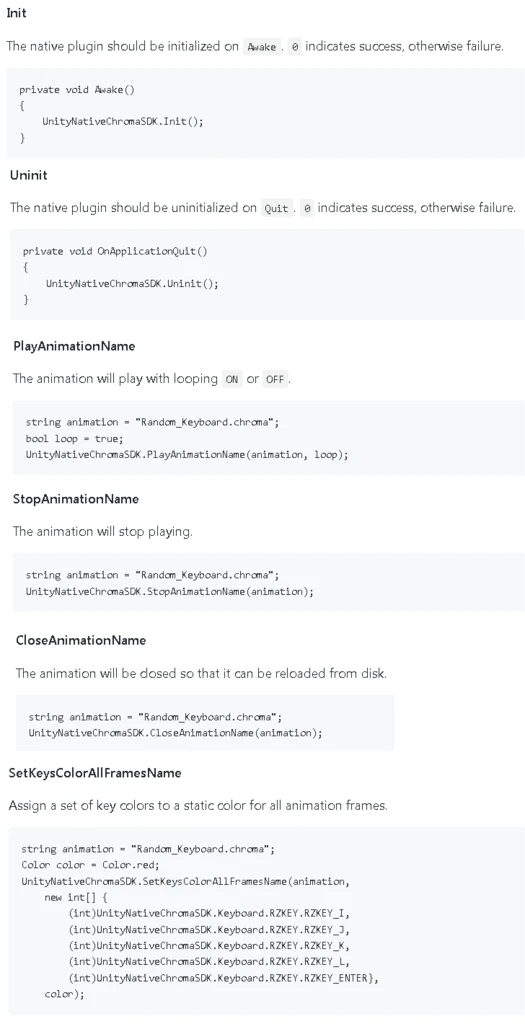

Furthermore, Chroma is only supported on the Windows platform, so make sure to wrap all Chroma code in #if UNITY_STANDALONE_WIN blocks. Below is a quote from the API documentation here for the most important API functions for playback:

- Testing on real/emulated hardware

Now that we have animations defined and code for playing them, you will want to test them. You can test either on real hardware (by playing the game with the Chroma device connected) or with the Chroma Emulator, which simulates Chroma lighting for a variety of devices. At a bare minimum, you need one real Chroma device set up before the emulator can be used. Usage should be straightforward.

- Behind-the-scenes look at Coffee Crisis Chroma integration

A limitation of the Unity Chroma setup is that animations are static; it doesn't really allow for combining several animations to encode data. When designing the in-game Chroma animation, we wanted to encode most of the game's information on the keyboard. Luckily, we were able to exploit a few things in the Unity Chroma setup as a workaround to allow dynamic key lighting. Coffee Crisis' in-game Chroma animation is simply a dummy keyboard animation with no keys lit.

We rely heavily on the SetKeysColorAllFramesName API function to manually update the keys from an Update() method; however, there is a catch. To edit an animation at runtime, you must do the following:

- Close it first with CloseAnimationName

- Manually change your keys' colors with SetKeysColorAllFramesName

- Play dynamic animation back with PlayAnimationName
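The three steps above can be sketched as a single helper. This is a minimal illustration, not our shipped code: `IChromaAnimations`, `DynamicChroma`, and `RecordingChroma` are hypothetical names, and the parameter lists on the three methods are assumptions, so check the plugin's API documentation for the real signatures.

```csharp
using System.Collections.Generic;

// Hypothetical abstraction over the three SDK functions named above.
// The parameter lists are assumptions made for illustration only.
public interface IChromaAnimations
{
    void CloseAnimationName(string animation);
    void SetKeysColorAllFramesName(string animation, int[] keys, int color);
    void PlayAnimationName(string animation, bool loop);
}

public static class DynamicChroma
{
    // Applies a new color to the given keys of an already-loaded animation.
    public static void Refresh(IChromaAnimations chroma, string animation,
                               int[] keys, int color)
    {
        chroma.CloseAnimationName(animation);                      // 1. close
        chroma.SetKeysColorAllFramesName(animation, keys, color);  // 2. recolor
        chroma.PlayAnimationName(animation, true);                 // 3. play (looping)
    }
}

// Stand-in implementation that only records the call order, so the
// sequence can be exercised without a keyboard attached.
public sealed class RecordingChroma : IChromaAnimations
{
    public readonly List<string> Calls = new List<string>();

    public void CloseAnimationName(string animation) { Calls.Add("close"); }

    public void SetKeysColorAllFramesName(string animation, int[] keys, int color)
    {
        Calls.Add("set");
    }

    public void PlayAnimationName(string animation, bool loop) { Calls.Add("play"); }
}
```

In a real project, the interface would be implemented by a thin class that forwards to the plugin's functions inside an #if UNITY_STANDALONE_WIN block, which also lets non-Windows builds swap in a do-nothing implementation.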

This sequence of calls is what allowed us to pull off dynamic animations in-game. A problem with updating a dynamic Chroma animation from Update() in this way is that Update() runs every frame. Different models of Chroma keyboards have different refresh rates, and pushing updates at full speed can severely lag other people's machines. As a workaround, we added a cooldown timer so that dynamic Chroma animations update only every 250ms. Please see our truncated, relevant code attached, for a sample of how we set up dynamic Chroma animations to work.
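A cooldown of this kind can be sketched in plain C#. This is an illustrative sketch rather than our attached code: the `Cooldown` class and its `Tick` method are hypothetical names, and the only detail taken from the text above is the 250ms interval.

```csharp
// Hypothetical helper: lets the caller do work at most once per interval.
public sealed class Cooldown
{
    private readonly float intervalSeconds;
    private float remaining; // starts at 0 so the first Tick fires immediately

    public Cooldown(float intervalSeconds)
    {
        this.intervalSeconds = intervalSeconds;
        this.remaining = 0f;
    }

    // Call once per frame with that frame's delta time. Returns true when
    // the interval has elapsed and the caller should do its work now.
    public bool Tick(float deltaSeconds)
    {
        remaining -= deltaSeconds;
        if (remaining > 0f)
        {
            return false;
        }
        remaining = intervalSeconds; // restart the countdown
        return true;
    }
}
```

In a Unity script, one would create `new Cooldown(0.25f)` once, call `Tick(Time.deltaTime)` from Update(), and run the close, recolor, and play sequence only on frames where it returns true.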