Game Development

Phantom Gear: Facing the Force

Even the most battle-hardened heroes need enemies to blast, and Phantom Gear’s protagonist, Josephine, will be no different. After all, what kind of game would an action platformer be without any foes to shoot at? Thankfully, Josephine is only clashing with a single organization within the game’s story, the Ocular Force, and it’s up to her to recover a piece of an energy source that can be the solution to their world’s problems.

However, the Ocular Force is no slouch when it comes to numbers, and there are plenty of mooks that Josephine has to face. Today, we’ll be taking a look at all these disposable bags of flesh and hulking constructs of metal that Josephine has to deal with if she ever hopes to take back from them what they stole.

UE4 Performance Indicators and Solutions

This doc outlines the primary performance indicators in the context of Unreal Engine, including how to measure them, and suggestions on addressing performance problems in each area.

Reduce Draw Calls

Draw calls are typically one of the most expensive parts of rendering a complex scene. This is among the first things you want to look into reducing as much as possible.

How to Measure

Use the console command stat RHI and check “DrawPrimitive calls” under the “Counters” category.

Use the console command stat scenerendering and check “Mesh Draw calls” under the “Counters” category. Unlike the “DrawPrimitive calls” counter, this counter will only show draw calls from meshes.

Hierarchical Instanced Static Meshes

Meshes that are rendered as part of an instanced static mesh component (ISMC) or hierarchical instanced static mesh component (HISMC) will share draw calls. The difference between the two is that an HISMC can render different LODs for its instances at once, whereas all rendered instances of an ISMC share the same LOD. For this reason, HISMC should be preferred, but either option reduces draw calls. Note: since UE 4.22, with some limitations, the engine can automatically merge identical static meshes with the same materials and material uniform parameters into shared draw calls, similar to instanced meshing. However, this automation is limited to the DX11 feature set, so other feature sets, such as DX12 or anything earlier than DX11, don’t receive this benefit (read more under “Draw Call Merging” in the UE4 doc: Mesh Drawing Pipeline). For this reason, I still recommend using instanced static meshes.

Foliage Paint Assets Where Possible

If you place static meshes using the foliage painter, each type of mesh painted will be rendered as though all of its instances belong to a hierarchical instanced static mesh component (HISMC). This is a simpler option than managing separate Actors interacting with a shared HISMC to add/remove themselves from the HISMC pool as needed. However, with this option, each placed static mesh is not its own Actor, reducing simulation/interaction options for gameplay purposes. Additionally, adding or removing instanced static meshes through the foliage painter is only available at editor time, not at runtime. As a result, this method should be preferred when you’re placing many common static meshes for set-dressing which do not need any C++/BP functionality built into them.

Reduce Material Slots for Meshes

Each material slot on a mesh will increase its draw call count. For clarity, material slots are different from texture uniform references. Material slots are where you assign material instances. Material slot count is determined on the artist’s side when they design the mesh and determine which parts of the mesh will use different materials. While you can’t change this in-engine, you can check your mesh assets in-engine to determine if they have excessive material slots, and work with your artists to get them optimized to use fewer material slots. The most general rule of thumb is that the simpler the object, the fewer material slots it should have. For example, a rock should have one material slot, and a wood + metal bench could have two (one for the wood parts to share, and one for the metal parts to share). An example of an excessive material slot setup would be if each piece of wood or metal on the bench had its own material slot, resulting in a total of 8 material slots.
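To make the slot arithmetic concrete, here is a rough sketch of a draw call estimate in plain C++. It is a simplified cost model, not engine code: the `MeshPlacement` struct and both function names are made up for illustration, and it ignores LOD splits, culling, shadow passes, and any engine-side draw call merging.

```cpp
#include <cstdint>

// Hypothetical description of one kind of mesh placed in a level.
struct MeshPlacement {
    std::uint32_t materialSlots;  // material slots on the mesh asset
    std::uint32_t copies;         // how many times the mesh is placed
};

// Every copy is its own component, so every copy pays for every slot.
constexpr std::uint32_t drawCallsPerActor(MeshPlacement mesh) {
    return mesh.materialSlots * mesh.copies;
}

// All copies share one instanced component, so the slots are paid for once.
// Assumption: no LOD splitting, culling, or engine-side merging is modeled.
constexpr std::uint32_t drawCallsInstanced(MeshPlacement mesh) {
    return mesh.copies == 0 ? 0 : mesh.materialSlots;
}
```

Under this model, 50 copies of the 8-slot bench cost 400 draw calls, the 2-slot version costs 100, and putting the 2-slot bench into one instanced component brings it down to 2.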

Actor Merging

Unreal is capable of merging multiple selected actors within a level into a single new actor. The main benefit of this feature is reducing draw calls. See their documentation here: UE4 docs: Actor Merging. Actor merging can be carried out in a couple of ways. The first way will effectively create a new mesh (with new UVs) and even make a single material which combines all the selected actors’ materials. This allows you to directly convert multiple draw calls caused by multiple actors into a single draw call.

Actor merging also supports merging multiple actors into using one instanced mesh component. This is helpful for cases where you have many copies of the same static mesh actor.

Reduce Rendered Tri Count

How to Measure

Use the console command stat RHI and check “Triangles drawn” under the “Counters” category.

Creating Mesh LODs

Creating different mesh LODs (levels of detail) will cause the mesh to render with reduced geometry complexity depending on its size as a percentage of the screen. Unreal can automatically generate LODs for your meshes with some simple configuration within the mesh’s asset. This is one of the simplest ways to reduce the number of rendered triangles.
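As a rough illustration of how screen-size-based LOD selection works, here is a small sketch in plain C++. The threshold values and the names `kLodScreenSize` and `selectLod` are assumptions made for the example; the engine's own selection logic has more inputs (for example LOD bias and per-platform settings) than this models.

```cpp
#include <cstddef>

// Hypothetical screen-size thresholds, one per LOD, in descending order.
// LOD i is used while the mesh covers at most kLodScreenSize[i] of the screen.
constexpr double kLodScreenSize[] = {1.0, 0.5, 0.25, 0.1};
constexpr std::size_t kLodCount = sizeof(kLodScreenSize) / sizeof(kLodScreenSize[0]);

// Returns the coarsest LOD whose threshold still covers the given screen size.
constexpr std::size_t selectLod(double screenSize) {
    std::size_t lod = 0;
    while (lod + 1 < kLodCount && screenSize <= kLodScreenSize[lod + 1]) {
        ++lod;
    }
    return lod;
}
```

With these thresholds, a mesh filling 80% of the screen renders LOD 0, one filling 30% renders LOD 1, and one filling 5% renders LOD 3.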

Cull Distance Volumes

Cull distance volume actors allow fine-grained control over the visibility culling of any actors within the volume based upon their size and distance from the camera. The amount of triangle reduction you get from this depends on the use case. They are especially good for wide open environments with a range of object sizes.
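The size-and-distance idea can be sketched as a small lookup in plain C++. This is a simplified model under stated assumptions, not the engine's implementation: the `CullPair` struct, the example values, and the rule that an object uses the pair whose size is closest to its own are assumptions for illustration and should be checked against the Cull Distance Volume documentation.

```cpp
// Hypothetical size/distance pair, in the same units as the level.
struct CullPair {
    double size;          // object size this entry applies to
    double cullDistance;  // camera distance beyond which it is hidden (0 = never)
};

constexpr double absDiff(double a, double b) { return a > b ? a - b : b - a; }

// Picks the pair whose size is closest to the object's size, then compares
// the camera distance against that pair's cull distance.
constexpr bool isCulled(const CullPair* pairs, int count, double objectSize,
                        double distanceToCamera) {
    if (count <= 0) {
        return false;
    }
    int best = 0;
    for (int i = 1; i < count; ++i) {
        if (absDiff(pairs[i].size, objectSize) < absDiff(pairs[best].size, objectSize)) {
            best = i;
        }
    }
    const double limit = pairs[best].cullDistance;
    return limit > 0.0 && distanceToCamera > limit;
}

// Example setup: small props vanish early, large landmarks are never culled.
constexpr CullPair kExamplePairs[] = {{50.0, 1000.0}, {200.0, 5000.0}, {1000.0, 0.0}};
```

With this setup, a prop of size 60 is hidden once the camera is more than 1000 units away, while an object of size 900 stays visible at any distance.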

Reduce Overdraw

Overdraw describes how often texels (pixels in the context of textures) are written to within a framebuffer in the scope of one frame. When rendering a scene with opaque materials, texels will usually be written to once. Texels are often written to many more times than that, usually as a result of translucent or additive materials overlapping. For example, many overlapping fog particles result in the overlapped texels being written to many times, which is measured as a high amount of overdraw. Overdraw is most commonly a problem with foliage (which uses the foliage card technique, in which you have a foliage texture on an alpha translucent material) and particle systems.

Use Overdraw View Mode to Identify

Reducing Overdraw

The method to reduce overdraw is highly dependent on the specific cause of overdraw (for example, foliage, particle systems, or something else). This example looks at particle systems.

Shown above is a sample particle system producing a large amount of overdraw with its additive particles. A common cause of frame rate dips is spawning particle systems which produce a lot of quad overdraw. Quad overdraw is difficult to avoid when making some kinds of particle systems, and some amount of it is okay for these use cases if it’s temporary. However, in order to reduce quad overdraw, effort should be made to reduce the number of particles needed at once for these systems. Sometimes, this can be achieved without much change to the visuals of the system by reducing the quantity of the particles, and then increasing the opacity of the particles to compensate for the reduced particle quantity.

Reduce Lighting Complexity

Lighting complexity increases as dynamic light sources overlap. This is a significant source of performance cost from dynamic lights. Below is a sample scene with 3 point lights with different attenuation radii. We’ll then look at it with Light Complexity view mode.

Reducing Lighting Complexity - Attenuation and Avoiding Overlap

The difference in settings between all of these lights is just attenuation - no difference in intensity. The key takeaway is that the light’s attenuation setting is the factor which affects the radius in which it imposes a performance cost. Light intensity does not have an effect on this. As a result, make sure each dynamic light’s attenuation is proportional to how intense the light is and how much area it should visibly affect. As dynamic light sources move around, a smaller attenuation radius will reduce the amount they will overlap on average. You can see that where the 3 lights overlap, the complexity is the worst (the purple region in the center). In environments with dynamic lights, spacing them out to reduce overlap between their attenuations will reduce light complexity. Consider outright removing dynamic lights in cases with excessive light complexity.
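The effect of attenuation radius on overlap can be sketched with a simple count of how many lights reach a given point. This is plain C++ with made-up names (`PointLight`, `lightsAffecting`) and made-up positions and radii; it only models sphere overlap, not how the engine actually shades the pixel.

```cpp
// Hypothetical dynamic point light: position plus attenuation radius.
struct PointLight {
    double x, y, z;
    double attenuationRadius;
};

// Counts how many lights' attenuation spheres contain the point (px, py, pz).
constexpr int lightsAffecting(const PointLight* lights, int count,
                              double px, double py, double pz) {
    int overlapping = 0;
    for (int i = 0; i < count; ++i) {
        const double dx = lights[i].x - px;
        const double dy = lights[i].y - py;
        const double dz = lights[i].z - pz;
        const double r = lights[i].attenuationRadius;
        if (dx * dx + dy * dy + dz * dz <= r * r) {
            ++overlapping;
        }
    }
    return overlapping;
}

// Three lights 400 units apart on a line; only the radius differs between setups.
constexpr PointLight kWideLights[] = {
    {-400.0, 0.0, 0.0, 600.0}, {0.0, 0.0, 0.0, 600.0}, {400.0, 0.0, 0.0, 600.0}};
constexpr PointLight kTightLights[] = {
    {-400.0, 0.0, 0.0, 250.0}, {0.0, 0.0, 0.0, 250.0}, {400.0, 0.0, 0.0, 250.0}};
```

At the origin, the wide setup has all 3 lights overlapping, while the tight setup has only 1 light affecting the same point, even though the lights have not moved and their intensity is unchanged.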

Reduce Skeletal Mesh Animation Costs

Skeletal meshes contribute a significant amount to game-thread costs because they must tick and update on the CPU, on top of having to be rendered like a typical mesh on the render-thread. The methods outlined here are about measuring and reducing their performance impact on the game-thread (CPU).

Measuring Skeletal Mesh Costs

To quickly see how much skeletal meshes are contributing to game-thread costs, use the console command stat anim. From here, you can see how much time your skeletal meshes take to tick each frame.

Budgeted Skeletons

The Animation Budget Allocator (ABA) allows you to define game-thread cost limits collectively for skeletons which interact with the ABA. You can have skeletal mesh components opt-in to the ABA system by replacing them with the type “Skeletal Mesh Component Budgeted”. The ABA will reduce animation quality for budgeted skeletons dynamically based upon current animation performance, and the way it reduces quality is configurable. For example, dynamically reducing component tick rate, and/or removing pose interpolation. It will apply these optimizations based upon the calculated “significance” of skeletal meshes. For example, skeletal meshes that are further away from the camera can have lower significance, and thus be subject to much more optimization than skeletons close to the camera. With the C++ API for the ABA, you can define your own custom significance function to suit your use case’s requirements.

This should be the first optimization effort for your typical use cases of skeletal meshes, especially with how flexibly you can push the optimization with it.

Read more about animation budgeting in the UE4 docs: Animation Budget Allocator

Vertex Animation Textures

Vertex Animation Textures (VATs) are textures within which all necessary data from a skeletal mesh’s animations are baked. This can include skeletal mesh LODs as well. The VATs are then rendered through static meshes, resulting in a significant performance improvement over skeletal meshes. Downsides to this method are that animation events and any tech achieved through animation graphs (such as anim blending) are not available. The robustness of the feature set depends on how the VAT baking and the material functions which use it are implemented. There are a few plugins out there which implement them in a feature-rich way. This is one such plugin: Marketplace: Vertex Animation Toolset. VATs are ideal to use instead of skeletal meshes for animated actors which play a small/decorative role in gameplay and therefore don’t need the full feature set that comes with a skeletal mesh, such as environment creatures like birds or frogs. VATs are practically mandatory for cases where you need to render an extremely large number of animated entities (5000+) of any complexity, such as in crowd simulation, in tandem with Hierarchical Instanced Static Mesh components.

Usage of Tick()

Many gameplay features can be implemented as a part of an Actor’s Tick(), but using Tick is the most expensive option because it runs every single frame by default. A common cause of performance issues I’ve seen in several medium to large scope games is that most actors implement Tick(). While a single tick only takes a fraction of a millisecond, if nearly all actors in the game use Tick(), those fractions of a millisecond end up adding up to a lot - sometimes most of the frame budget. As a result, overuse of Ticking is considered a death by a thousand cuts.

Consider these alternatives instead of using Tick. Tick should be the last resort for implementing functionality.

- Event-driven systems (In C++, this is using Delegates. In blueprints this is using event dispatchers)

- Timers

- Actor Timelines
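To illustrate the event-driven alternative, here is a minimal sketch in plain C++ rather than Unreal's delegate macros. All names (`HealthChangedEvent`, `Character`, `HealthBar`, `bindHealthBar`) are hypothetical stand-ins: the point is only that the health bar is updated when the value changes, instead of polling the character every frame in Tick().

```cpp
#include <functional>
#include <utility>
#include <vector>

// Minimal stand-in for a multicast delegate / event dispatcher.
class HealthChangedEvent {
public:
    using Listener = std::function<void(int)>;

    void subscribe(Listener listener) { listeners_.push_back(std::move(listener)); }

    void broadcast(int newHealth) const {
        for (const Listener& listener : listeners_) {
            listener(newHealth);
        }
    }

private:
    std::vector<Listener> listeners_;
};

// Hypothetical character: listeners are notified only when health changes.
class Character {
public:
    HealthChangedEvent onHealthChanged;

    void applyDamage(int amount) {
        health_ -= amount;
        onHealthChanged.broadcast(health_);
    }

    int health() const { return health_; }

private:
    int health_ = 100;
};

// Hypothetical health bar: updated by the event instead of polling in Tick().
struct HealthBar {
    int shownHealth = 100;
    int updates = 0;
};

// Wires the bar to the character; both must outlive the subscription.
inline void bindHealthBar(Character& character, HealthBar& bar) {
    character.onHealthChanged.subscribe([&bar](int newHealth) {
        bar.shownHealth = newHealth;
        ++bar.updates;
    });
}
```

After `bindHealthBar(hero, bar)`, a call to `hero.applyDamage(30)` updates the bar exactly once. A polling version would do the same comparison every frame whether or not anything changed.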

If you feel like Tick is still the best way to implement a feature, consider reducing how often the Actor ticks by increasing its Tick Interval. Ticking once per frame (60 times a second at 60 fps) is usually excessive - sometimes 5 times a second is enough. Ticking 5 times per second is 12x less expensive than ticking 60 times per second.
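The 12x figure can be checked with a small helper. This is a back-of-the-envelope sketch in plain C++ with made-up function names; it assumes tick cost scales linearly with the number of ticks. In the engine itself, the interval is the value you would set through `PrimaryActorTick.TickInterval` or `AActor::SetActorTickInterval`, in seconds.

```cpp
// Ticks executed per second for a frame rate and a tick interval in seconds.
// An interval of 0 (or less) means "tick every frame".
constexpr double ticksPerSecond(double framesPerSecond, double tickIntervalSeconds) {
    if (tickIntervalSeconds <= 0.0) {
        return framesPerSecond;
    }
    const double intervalRate = 1.0 / tickIntervalSeconds;
    // An actor cannot tick more often than frames are produced.
    return intervalRate < framesPerSecond ? intervalRate : framesPerSecond;
}

// How many times cheaper the interval is compared with ticking every frame,
// assuming cost is proportional to the number of ticks.
constexpr double tickSavings(double framesPerSecond, double tickIntervalSeconds) {
    return framesPerSecond / ticksPerSecond(framesPerSecond, tickIntervalSeconds);
}
```

At 60 fps, an interval of 0.2 seconds gives about 5 ticks per second, so `tickSavings(60.0, 0.2)` comes out at roughly 12.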

Checking for Prevalence of Tick()

During runtime, use the console commands stat startfile and stat stopfile to begin and end a performance profiling session. This will create a UE stats file which you can load in the profiler GUI. One place to open this data is the “Session Frontend” window, accessed from Window -> Developer Tools in the toolbar. See this page for reference on using the profiler: UE4 doc: Profiler Tool Reference. Once the profiler data is loaded in the GUI, inspect it to see how much of your costs are collectively coming from your Tick() implementations across your classes. For most games, Ticking should be a minority of game thread costs.

Reduce Shader Complexity

Shader complexity is defined as the number of instructions a shader/material needs to execute. The more instructions, the higher the complexity, and the more expensive it is to render. Many materials in a game will typically have low complexity, but sometimes high complexity materials crop up, or many low-complexity shaders overlap due to translucency, adding up to high shader complexity for the covered pixels.

Identifying Shader Complexity Issues

Use the shader complexity view mode (hotkey Alt + 8) to visualize shader complexity in the viewport.

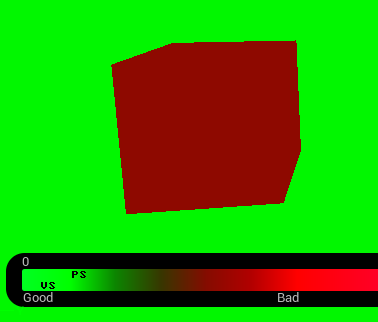

Above are examples of two cases where shader complexity is high. An opaque cube with a high complexity material (467 instructions), and a particle system with many overlapping low complexity translucent materials (60 instructions). You can read more about the view mode here: UE4 docs: Shader complexity view mode

Reducing Shader Complexity

For the particle system, the heat map looks similar to the quad overdraw view mode. The shader complexity in the case of the particle system is so high specifically because of the amount of quad overdraw (overlapping particle billboards, in this case). Optimizing the shader complexity of the particle material would be one angle of improving the shader complexity of the particle system, as would be reducing the amount of quad overdraw (see the section on Overdraw in this doc).

Reducing shader complexity for opaque materials like in the case of the above example cube requires modifying the material. The way this is to be done depends on why the material is so expensive. You can experiment with reducing parts of the material, recompiling it, and checking the instruction count in the material’s output stats window to see the amount the instruction count has changed by.

Types of commonly problematic content to check for high shader complexity in the context of a level include grass foliage, and situations in which there are many particle systems layering, such as during combat, or when environmental vfx are in play, like in a rainy environment.

Using Blueprints Effectively For Iteration

A Comparison on the Advantages of C++ Compared to Blueprints

Introduction

Unreal Engine provides blueprints as an effective way of quickly iterating on concepts without having to wait for lengthy C++ compilation times between changes. Blueprints also provide a way for designers to access C++ implemented functionality in a visual way, so they can participate in iterating on gameplay without knowing how to code.

Because of these strengths, Blueprints are best to iterate on ideas in their earliest stages. Compared to C++, Blueprints run into inherent maintainability problems as a result of their medium. Blueprints are more difficult to analyze, debug, and navigate compared to C++ code, because the C++ Visual Studio environment (with extensions) altogether has an incredibly powerful feature set which is not matched in Blueprints. A less experienced C++ developer without knowledge of these features and options might think Blueprints are the same if not better in those regards, and this is why this document exists.

This is the only time in the entire doc performance will be mentioned, to keep the matter simple and in Blueprint’s favor. Even if Blueprints are just as fast in runtime execution as C++, we should still have nearly all gameplay implementations start in Blueprint, then implemented in C++ after iteration. While I don’t believe Blueprints are as fast as C++ due to having to work on a separate VM layer (the time cost happens when switching in and out of VM context, and moving data in and out of the VM) let’s just say for the purpose of this document, runtime performance does not matter.

This is from a perspective of someone who has used blueprints in projects for 3 years, and C++ for about 7 years. A later section of this doc gets into it, but I also want to highlight that data-only blueprints have incredible value and should be a part of our projects, because they are the cleanest way to get asset references into C++. However, this document is about code implementation. The following sections get into the capabilities of debugging and navigation, as well as implementation time cost comparisons between C++ and Blueprints.

Analysis and Troubleshooting of BP compared to C++

Much of the process of fixing bugs involves analyzing the flow of control throughout the scope of runtime execution, and usually monitoring the state of data in one or more objects as code is executed. Doing this in the context of C++, there are many well established methods and tools provided. Some of these have equivalents in BP, but many do not. Additionally, the debugging features that are in both are less flexible and powerful in BP.

Debugging - Breakpoints

C++ and Blueprints both support breakpoints (pausing execution at a given line of code or node), and they are valuable for most debugging use cases. C++’s breakpoints with Visual Studio are incredibly powerful compared to Blueprint’s breakpoints.

- Breakpoint conditions: C++’s breakpoints in VS support “conditions”. That is, the breakpoint will only trip if a given expression that you program into the breakpoint evaluates to true. For example, if (xObj.dataMember >= y), then use this breakpoint. Notably, these breakpoint conditions can be added and removed without recompiling, so they can be added as needed in the middle of a debugging session. This is useful for debugging code which runs extremely frequently, and only rarely exhibits a bug, so you can add a condition to your breakpoint to make it only trip when the conditions you define are met. Link to breakpoint conditions VS documentation

- Data breakpoints: C++ in VS supports a very powerful feature called “data breakpoints”. Data breakpoints allow you to pause execution whenever data at any chosen memory address changes. This is incredibly powerful for debugging an entire category of bugs, in which data is being changed unexpectedly, and/or you’re not sure where the modification is happening from. Once a data breakpoint is hit, you can inspect the stack trace to see where the data write has originated from. This is useful to determine the cause of bugs for cases where the affected data is modified from so many different locations, to the extent it is not practical to use normal breakpoints at each line of code it is modified from, especially if those contexts are called frequently for purposes other than modifying the affected data, and most importantly, to potentially find an unknown source of data writes. Link to C++ data breakpoints VS documentation

- Breakpoint overview: In C++, you also get a breakpoint overview where you can see, search, and toggle all of your breakpoints throughout the entire codebase from one UI location. This is effective in cases where you need different breakpoints for different situations within a debugging session, and switching between sets of breakpoints quickly is needed for fast debugging. In comparison within blueprints, there exists no such control-table overview of all breakpoints - you’d have to manually go back through all the blueprints, find the nodes the breakpoints were on, re-enable them, and disable the old ones.

Debugging - Watches

Both C++ and Blueprints have “Watches” as a feature, in which you can monitor some data or state while execution is halted, but again, C++ in VS has much greater capabilities with its watch functionality. In VS C++, you can watch the state of many objects across the scope of multiple systems, including the contents of data containers, where each element and its data members can be inspected freely. Blueprints also have a “Watch”, but it is only valid for variables that are local to the current blueprint context. Using Watches effectively is another critical part of debugging many types of issues, so having a worse form of “Watch” in blueprints makes the typical debugging experience more challenging than it needs to be.

Preventative / Proactive Debugging - Static Analysis

Something C++ has which Blueprints don’t is the ability to leverage static analysis tooling. Static analysis results are very similar to compiler warnings, but static analysis tools can algorithmically find the places in your code where run-time errors can or will occur, and tell you about them. In this way, static analysis tools allow you to automatically find many categories of bugs (often, the worst kinds of bugs) without anyone having to play the game or use the software. The types of bugs static analysis tools can detect are things like uninitialized data access (like accessing a null reference), undefined behavior, memory leaks (if not using Unreal’s memory management for parts of the project), stack overflows, infinite loops (unintentionally stuck in while or for loops), numeric type overflows and underflows, array overruns and underruns, and much more. At any scale, static analysis can offer value to code quality and stability by pre-emptively detecting errors, similar to having an experienced developer review the code and point out places where there might be issues, or where there will certainly be issues. The value static analysis provides increases with the scale and complexity of the project. This post on the Unreal Engine site goes into some detail about the value of static analysis specifically in the context of Unreal Engine game development. Notably, John Carmack, the CTO of Oculus VR, went on record saying “The most important thing I have done as a programmer in recent years is to aggressively pursue static code analysis.” Given that blueprints cannot leverage static analysis tools, they are at a severe disadvantage for maintainability at scale.

Code Navigation

Speed of navigating code (or nodes) is important when evaluating code, whether you’re analyzing it while debugging, remembering how a system works before you modify or extend it, or visiting many sections of code during a refactoring session. C++ is substantially faster and more versatile to navigate than blueprints with the right tools and training. Tools such as Visual Assist or Resharper C++ are standard for UE4 developers. They are so widely used among UE4 developers that both have UE4-specific features and settings, although their best features are universal to C++. The faster code can be navigated, the faster it is to analyze and make progress on. Both C++ and blueprints have a few common navigation use cases covered, like “Find all references”, but Visual Studio and third-party tools provide even greater and more flexible options than blueprints. Here are two example use cases to demonstrate the power of navigation options which I use constantly while working in C++ and C#, and miss whenever I work in Blueprints. There are many more, but

C++ Navigation - Symbol Search

Imagine I want to find the class type of a data member in a “PlayerCharacter” class called “characterMesh” - not sure if it’s a static mesh, instanced static mesh, skeletal mesh, and so on. From anywhere in the entire codebase, in C++ with Resharper or Visual Assist, you could use Alt-Shift-S, type “char mesh” and press enter, then you will be instantly looking where the data member “characterMesh” was defined, in the PlayerCharacter class, where you can see its class type. If multiple things matched the filter of “char mesh” you would be able to see them in the context window and where they are defined (such as file name) and pick the one you intended before pressing enter. This is called a “symbol search” - it allows you to search the entire codebase for symbols (identifiers) which match your filter. This is also very useful for finding things named after something. For example, symbol searching “weapon mod” would allow you to find all classes, methods, data members, and so on with “weapon” AND “mod” anywhere in their name, viewable in an organized list with file names, and preview each line the matching code was found in. Clicking any of them would take you to that line. The closest thing a blueprint workflow has is the ability to search all UE4 assets by pressing alt-O, and searching for a blueprint by name there, then opening it, and then searching for the variable by scrolling through the BP’s details.

C++ Navigation - Peek Definition

In C++, you can use the “Peek Definition” shortcut while selecting any identifier/symbol in code to pop up an overlay showing the code context of where that identifier was defined, without leaving your current code context. This allows you to see both how and where it was defined at a glance, with even the ability to scroll through and modify code in the “Peek Definition” overlay, and to quickly close the overlay by pressing the Esc key. For example, if I was working with an object of the “PlayerCharacter” class and saw code reference its “characterMesh” member like so: playerChar.characterMesh, and I wanted to quickly see what other data members are in that class besides “characterMesh”, I would just have to use the “Peek Definition” hotkey on the word “characterMesh” to immediately see where it was defined in the PlayerCharacter class, then press Esc whenever I want to close the peek overlay to get back the full view of the code I was originally looking at. This allows for rapid context switching to get information about whatever it is you’re working on.

Summary of C++ Navigation

The above two navigation features are just a couple of many navigation features I use in C++ that don’t have a good equivalent in Blueprints. The visual assist page here Visual Assist - Navigation Features shows and explains many navigation features in just that extension alone, such as GoTo related symbol, Find by Context, and bookmarking code locations with VA Hashtags to rapidly jump to at a later time.

Code Creation Time

C++ code is substantially faster to write than the equivalent Blueprints. Here are some tangible examples.

Defining Functions / Methods

Things like function definitions in blueprints have to be created by using a bunch of drop-lists, buttons, and text fields. For example, just adding an input parameter to a function requires clicking a drop list to pick a data type from a list, then picking whether it’s an array, map, etc, from another drop list, then entering the parameter name in a text field. In C++, adding a parameter is as simple as typing it into the method’s declaration, for example,

TArray<UPlayerCharacter*> & characters as a parameter is a reference to an array of pointers to UPlayerCharacter type.

Implementing calculations

Take this example calculation for determining a player’s maxHP stat, in C++:

player.maxHP = player.vitality * 10 + player.level * 5;

The equivalent in blueprints is:

1 player node.

3 “get” nodes from the player node, using three pins to get maxHP, vitality, and level variables as nodes.

2 multiply nodes, each with two input pins.

1 addition node with two inputs (each coming from the multiply nodes).

1 set node to write the result of the addition node to player.maxHP, which has an input pin using the maxHP variable as its target, and another input pin for the value the variable should have.

8 nodes (1 player node, 2 multiply nodes, 1 add node, 1 set node, 1 get maxHP node, 1 get vitality node, 1 get level node) have to be added, and (3 get pins + 4 multiply input pins + 2 addition input pins + 1 set value pin + 1 set “target” pin) = 11 pin connections have to be made in order to do the same as that one simple line of code. The fastest part of that process would be quickly adding the multiply and addition nodes by pressing the “m” and “a” keys as shortcuts. Additionally, in blueprints, you have to take some care to line up the nodes in a way that physically makes sense to be readable. Measuring the amount of time it takes to write the line of C++ code, it took me about 7 seconds. That timing is not close to possible in blueprints. It’s certainly possible there are more efficient ways of implementing that calculation in blueprints, but the time it would take to determine the most node-and-pin minimalistic approach already makes it cost more than the C++ implementation, and the sheer amount of clicks and dragging (as well as going through the list repeatedly for which variable to get from a node) would guarantee it would always take more than the handful of seconds the C++ version takes.
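For reference, here is the same calculation as a self-contained C++ sketch. The `Player` struct and the function names are assumptions for illustration (the original line only shows the member names `maxHP`, `vitality`, and `level`); the formula itself is unchanged.

```cpp
// Hypothetical player stats; member names follow the example line above.
struct Player {
    int vitality;
    int level;
    int maxHP;
};

// The same one-line formula, wrapped in a function so it can be reused.
constexpr int computeMaxHP(int vitality, int level) {
    return vitality * 10 + level * 5;
}

// Writes the result back to the player, like the "set" node does in Blueprints.
inline void refreshMaxHP(Player& player) {
    player.maxHP = computeMaxHP(player.vitality, player.level);
}
```

Even with the struct and helper included, this is a few lines of typing, and the expression reads the same way the design rule would be written down: 10 HP per point of vitality plus 5 HP per level.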

Conclusion

Blueprints and C++ each have very different strengths and weaknesses, to the point that they shouldn’t be seen as interchangeable tools. For clarity up front, I think all of our Unreal projects should use Blueprints, but only for their strengths and nothing beyond that. Blueprints’ key strengths are that data-only blueprints provide the best way to get asset references and other data definitions into C++ in a maintainable way, and that blueprints are fast to prototype with. Just in terms of early iteration times, Blueprints are faster in development than C++ because the recompile time is very fast, unlike C++’s very slow compilation. On the other hand, C++ is much easier to debug due to a rich set of debug and navigation tools and options. A C++ codebase is also easier for an experienced developer to learn and become accustomed to as a result of the navigational options, and faster to create new code within due to not having to mess with a bunch of nodes and pins to write simple expressions. Further, static analysis tools enhance the process to make C++ code more stable and then maintain that stability at any scale, which objectively improves the quality of the product. However, this is not to say nothing should use Blueprints outside of prototyping. Data-only Blueprints are still the best way to get asset references from the editor into something you can access in C++. Extremely simple event driven logic, like that in most UI implementations, is also fine to do and leave in blueprints. This is also great, because UI is mostly iterated on by artists and designers - not developers - making blueprints a great choice for them to use.

In terms of gameplay implementation and not data-only use cases, Blueprints are best used for early iteration and prototyping, for example, when experimenting with how a feature looks and behaves. When that feature gets the sign-off, it should be converted to C++ immediately so it can gain all the aforementioned benefits, because it will no longer benefit from the rapid iteration speed of blueprints. It is true that sometimes a feature will get revisited for iteration much later after it was signed off on - in which case, just iterate again in blueprints, then move it back to C++ when it’s determined to be the required implementation. Again, only features that are very simple and not that important to gameplay should be left in Blueprint. Everything that is important (even if simple) and/or even slightly complicated should be moved to C++.

Side reference:

Here’s a great video which gets into the strengths and weaknesses of blueprints. The presenter, Zak Parrish, is a senior of Developer Relations and support at Epic Games who works with development studios to improve their use of the engine. With his experience from assisting studios and working with the team at Epic Games, he has the authority to talk about what is and isn’t a good use for Blueprints. I agree entirely with what he states, and many of my opinions are the result of applying his advice in projects.

Case Study: Device Compatibility & Google Play Settings

Without question, my favorite thing about the gaming community is the camaraderie with colleagues. It’s no secret that game developers of every level are often the real superfans of other independent game studios.

A great deal of the gaming community lives and breathes with this type of thought-sharing, feedback, and help. Every week, we receive questions from new friends and old, ranging from shared opportunities and game design feedback to troubleshooting and technical issues. Taking the more common, thematic questions and reusing them as blog posts feels like a natural step for us, and broadens the number of developers we can assist.

The mini-case study below helps with a very small but recurring issue that first-time game studios and game developers sometimes run into: device compatibility settings.

It’s often the case that beginners who are managing a store upload for the first time miss a few steps. One of the most common mistakes is not correctly configuring the Device catalog.

If you look at the example below, you’ll see 15K+ devices supported from a project one of our senior developers uploaded.

Here, you’ll see a similar project in the same month, and same version of Unity:

With just under 5K devices supported, only about 30% of the comparable project above, something is wrong.

In this case, the game app’s minimum API level is set to 23. This means that Android devices must be running at least that API level to be compatible with, download, and play the game app.

If you look at the package configured by the senior dev, you’ll see the difference:

Set the minimum API level to 19 within Unity Player Settings, and you are good to go.

This confusion often starts because the Google Play upload dashboard will say that it requires a recent API level for the upload. That requirement applies to the target API level. You do not have to set the minimum API level to be the latest.